Avocado pesto is a deliciously creamy and fun variation on classic basil pesto. This avocado pesto recipe features ripe avocado in addition to the usual basil, Parmesan, olive oil and pine nuts (in these photos, I used pepitas instead, which are lovely as well). A squeeze of fresh lemon brightens and complements the flavors, and helps keep the avocado green.

You can use avocado pesto on pasta, of course. It makes a stellar veggie dip and sandwich spread, too. You’ll find more uses and tips for it below. The one caveat is that avocados brown with time, so it’s best used immediately or stored in a jar until you’re ready to serve.

The post Avocado Pesto appeared first on Cookie and Kate.

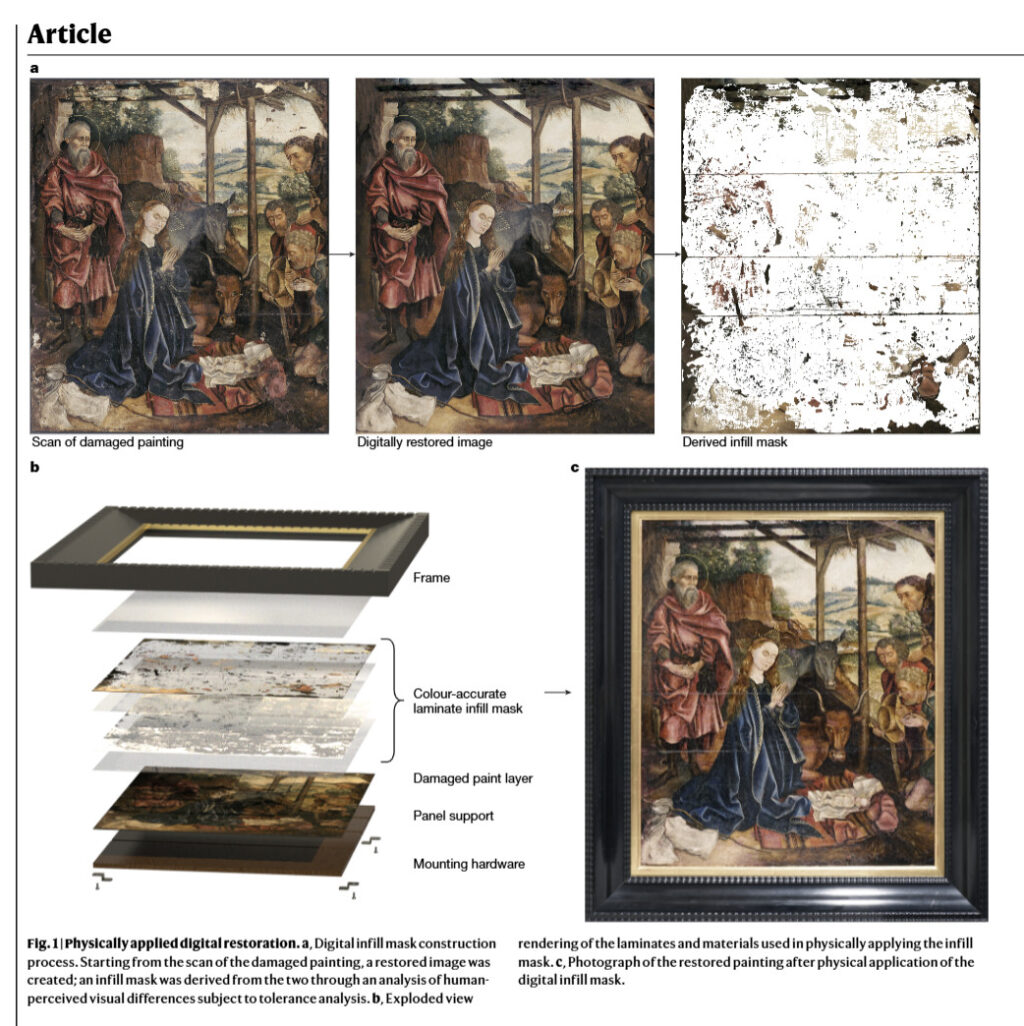

MIT graduate student Alex Kachkine once spent nine months meticulously restoring a damaged baroque Italian painting, which left him plenty of time to wonder if technology could speed things up. Last week, MIT News announced his solution: a technique that uses AI-generated polymer films to physically restore damaged paintings in hours rather than months. The research appears in Nature.

Kachkine's method works by printing a transparent "mask" containing thousands of precisely color-matched regions that conservators can apply directly to an original artwork. Unlike traditional restoration, which permanently alters the painting, these masks can reportedly be removed whenever needed, making the process reversible.

"Because there's a digital record of what mask was used, in 100 years, the next time someone is working with this, they'll have an extremely clear understanding of what was done to the painting," Kachkine told MIT News. "And that's never really been possible in conservation before."

Figure 1 from the paper. Credit: MIT

Nature reports that up to 70 percent of institutional art collections remain hidden from public view due to damage—a large amount of cultural heritage sitting unseen in storage. Traditional restoration methods, where conservators painstakingly fill damaged areas one at a time while mixing exact color matches for each region, can take weeks to decades for a single painting. It's skilled work that requires both artistic talent and deep technical knowledge, but there simply aren't enough conservators to tackle the backlog.

The mechanical engineering student conceived the idea during a 2021 cross-country drive to MIT, when gallery visits revealed how much art remains hidden due to damage and restoration backlogs. As someone who restores paintings as a hobby, he understood both the problem and the potential for a technological solution.

To demonstrate his method, Kachkine chose a challenging test case: a 15th-century oil painting requiring repairs in 5,612 separate regions. An AI model identified damage patterns and generated 57,314 different colors to match the original work. The entire restoration process reportedly took 3.5 hours—about 66 times faster than traditional hand-painting methods.
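As a rough sanity check on those figures, the arithmetic can be worked through in a few lines. This is only a sketch built from the numbers quoted above; the function name is made up for illustration, and the per-region averages are derived values, not measurements reported by Kachkine.

```python
# Figures quoted in the article.
MASK_HOURS = 3.5   # reported time for the mask-based restoration
SPEEDUP = 66       # "about 66 times faster" than hand-painting
REGIONS = 5_612    # separate damaged regions
COLORS = 57_314    # distinct colors generated for the mask


def implied_manual_hours(mask_hours: float, speedup: float) -> float:
    """Hours of hand-painting implied by the reported speedup (hypothetical helper)."""
    return mask_hours * speedup


manual_hours = implied_manual_hours(MASK_HOURS, SPEEDUP)

# Derived averages only: the mask is applied as a whole, not region by region.
seconds_per_region = MASK_HOURS * 3600 / REGIONS
colors_per_region = COLORS / REGIONS

print(f"implied manual effort: {manual_hours:.0f} hours")
print(f"average mask time per region: {seconds_per_region:.2f} s")
print(f"average colors per region: {colors_per_region:.1f}")
```

In other words, the reported speedup implies roughly 231 hours of hand-painting for the same job, and each damaged region needed about ten distinct colors on average.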

Alex Kachkine, who developed the AI-printed film technique. Credit: MIT

Notably, Kachkine avoided using generative AI models like Stable Diffusion or the "full-area application" of generative adversarial networks (GANs) for the digital restoration step. According to the Nature paper, these models cause "spatial distortion" that would prevent proper alignment between the restored image and the damaged original.

Instead, Kachkine used computer vision techniques drawn from earlier art-conservation research: "cross-applied colouration" for simple damages like thin cracks, and "local partial convolution" for reconstructing low-complexity patterns. For areas of high visual complexity, such as faces, Kachkine relied on traditional conservator methods, transposing features from other works by the same artist.

From pixels to polymers

Kachkine's process begins conventionally enough, with traditional cleaning to remove any previous restoration attempts. After scanning the cleaned painting, the aforementioned algorithms analyze the image and create a virtual restoration that "predicts" what the damaged areas should look like based on the surrounding paint and the artist's style. This part isn't particularly new—museums have been creating digital restorations for years. The innovative part is what happens next.

Custom software (shared by Kachkine online) maps every region needing repair and determines the exact colors required for each spot. His software then translates that information into a two-layer polymer mask printed on thin films: one layer provides color, while a white backing layer ensures the full color spectrum reproduces accurately on the painting's surface. The two layers must be precisely aligned for this to work.
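To make the idea concrete, here is a minimal sketch of the region-mapping step. It is not Kachkine's software. It assumes the virtual restoration is available as a grid of RGB tuples and that a boolean grid already marks which pixels are damaged; the function names, the 4-connected flood fill, and the use of `None` for transparent film are all illustrative choices.

```python
from __future__ import annotations

from collections import deque

WHITE = (255, 255, 255)


def label_regions(damaged: list[list[bool]]) -> list[list[tuple[int, int]]]:
    """Group damaged pixels into 4-connected regions using a breadth-first flood fill."""
    height, width = len(damaged), len(damaged[0])
    seen = [[False] * width for _ in range(height)]
    regions = []
    for y in range(height):
        for x in range(width):
            if not damaged[y][x] or seen[y][x]:
                continue
            queue, region = deque([(y, x)]), []
            seen[y][x] = True
            while queue:
                cy, cx = queue.popleft()
                region.append((cy, cx))
                for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                    inside = 0 <= ny < height and 0 <= nx < width
                    if inside and damaged[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            regions.append(region)
    return regions


def build_mask_layers(restored, damaged):
    """Return (color_layer, backing_layer); None marks film left transparent."""
    height, width = len(damaged), len(damaged[0])
    color = [[None] * width for _ in range(height)]
    backing = [[None] * width for _ in range(height)]
    for region in label_regions(damaged):
        for y, x in region:
            color[y][x] = restored[y][x]  # color taken from the virtual restoration
            backing[y][x] = WHITE         # white backing sits under every printed pixel
    return color, backing


# Tiny 3x3 example: a two-pixel vertical crack and one isolated loss.
restored = [
    [(10, 10, 10), (200, 30, 30), (10, 10, 10)],
    [(10, 10, 10), (210, 40, 40), (10, 10, 10)],
    [(90, 90, 200), (10, 10, 10), (10, 10, 10)],
]
damaged = [
    [False, True, False],
    [False, True, False],
    [True, False, False],
]

regions = label_regions(damaged)
color_layer, backing_layer = build_mask_layers(restored, damaged)
colors_used = {c for row in color_layer for c in row if c is not None}
print(len(regions), len(colors_used))  # prints "2 3"
```

Because both layers are generated from the same pixel grid, they share one coordinate system, which is the property that makes precise alignment possible when the films are printed and stacked.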

High-fidelity inkjet printers produce the mask layers, which Kachkine aligns by hand and adheres to the painting using conservation-grade varnish spray. Importantly, the polymer materials dissolve in standard conservation solutions, allowing future removal of the mask without damaging the original work. Museums can also store the digital files documenting every change made during restoration, creating a record for future conservators.

Kachkine says that the technology doesn't replace human judgment—conservators must still guide ethical decisions about how much intervention is appropriate and whether digital predictions accurately capture the artist's original intent. "It will take a lot of deliberation about the ethical challenges involved at every stage in this process to see how can this be applied in a way that's most consistent with conservation principles," he told MIT News.

For now, the method works best with paintings that include numerous small areas of damage rather than large missing sections. In a world where AI models increasingly seem to blur the line between human- and machine-created media, it's refreshing to see a clear application of computer vision tools used as an augmentation of human skill and not as a wholesale replacement for the judgment of skilled conservators.