So far this year, electricity use in the US is up nearly 4 percent compared to the same period the year prior. That comes after decades of essentially flat use, a change that has been associated with a rapid expansion of data centers. And a lot of those data centers are being built to serve the boom in AI usage. Given that some of this rising demand is being met by increased coal use (as of May, coal's share of generation is up about 20 percent compared to the year prior), the environmental impact of AI is looking pretty bad.

But it's difficult to know for certain without access to the sorts of details that you'd only get by running a data center, such as how often the hardware is in use, and how often it's serving AI queries. So, while academics can test the power needs of individual AI models, it's hard to extrapolate that to real-world use cases.

By contrast, Google has all sorts of data available from real-world use cases. As such, its release of a new analysis of AI's environmental impact is a rare opportunity to peer a tiny bit under the hood. But the new analysis suggests that energy estimates are currently a moving target, as the company says its data shows the energy drain of a search has dropped by a factor of 33 in just the past year.

What’s in, what’s out

One of the big questions when doing these analyses is what to include. There's obviously the energy consumed by the processors when handling a request. But there's also the energy required for memory, storage, cooling, and more needed to support those processors. Beyond that, there's the energy used to manufacture all that hardware and build the facilities that house them. AIs also require a lot of energy during training, a fraction of which might be counted against any single request made to the model post-training.

Any analysis of energy use needs to make decisions about which of these factors to consider. In many past analyses, various factors have been skipped, largely because the people performing the analysis didn't have access to the relevant data. They probably didn't know how many processors need to be dedicated to a given task, much less the carbon emissions associated with producing them.

But Google has access to pretty much everything: the energy used to service a request, the hardware needed to do so, the cooling requirements, and more. And, since it's becoming standard practice to follow both Scope 2 and Scope 3 emissions that are produced due to the company's activities (either directly, through things like power generation, or indirectly through a supply chain), the company likely has access to those, as well.

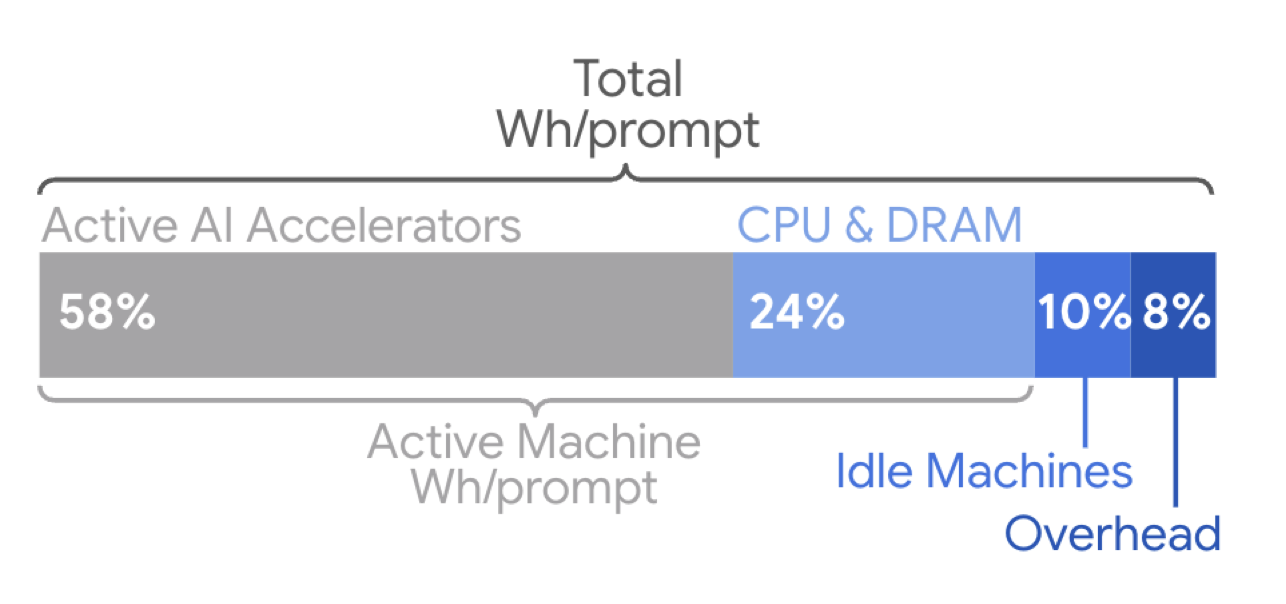

For the new analysis, Google tracks the energy of CPUs, dedicated AI accelerators, and memory, both while they are actively handling queries and while they idle between queries. It also follows the energy and water use of the data center as a whole and knows what else is in that data center, so it can estimate the fraction that's given over to serving AI queries. It's also tracking the carbon emissions associated with the electricity supply, as well as the emissions that resulted from the production of all the hardware it's using.

Three major factors don't make the cut. One is the environmental cost of the networking capacity used to receive requests and deliver results, which will vary considerably depending on the request. The same applies to the computational load on the end-user hardware; that's going to see vast differences between someone using a gaming desktop and someone using a smartphone. The one thing that Google could have made a reasonable estimate of, but didn't, is the impact of training its models. At this point, it will clearly know the energy costs there and can probably make reasonable estimates of a trained model's useful lifetime and number of requests handled during that period. But it didn't include that in the current estimates.
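
The amortization described here is simple arithmetic, even though Google left it out. The following is a minimal sketch, not Google's method: the function name is hypothetical, and every input in the usage lines is a placeholder chosen only to exercise the function, not a figure from the analysis.

```python
def training_energy_per_request_wh(training_energy_mwh: float,
                                   lifetime_days: float,
                                   requests_per_day: float) -> float:
    """Spread a one-time training energy cost evenly over every request
    the model serves during its useful lifetime. Returns watt-hours."""
    total_requests = lifetime_days * requests_per_day
    if total_requests <= 0:
        raise ValueError("the model must serve at least one request")
    return training_energy_mwh * 1_000_000 / total_requests  # MWh -> Wh


# Placeholder inputs (assumptions, not reported values).
share_wh = training_energy_per_request_wh(
    training_energy_mwh=1_000, lifetime_days=100, requests_per_day=10_000_000)
print(f"{share_wh:.3f} Wh of training energy per request")
```

The result depends on the assumed lifetime and request volume, which is why the estimate requires data only the operator has.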

To come up with typical numbers, the team that did the analysis tracked requests and the hardware that served them for a 24-hour period, as well as the idle time for that hardware. This gives them an energy-per-request estimate, which differs based on the model being used. For each day, they identify the median prompt and use that to calculate the environmental impact.
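
As a rough illustration of that procedure, the sketch below charges each prompt its own active energy plus a share of the idle energy, then takes the median. The even split of idle energy is an assumption made for illustration (the article does not say how Google allocates it), and the measurements are made up.

```python
from statistics import median


def per_prompt_energy_wh(active_wh: list[float], idle_wh: float) -> list[float]:
    """Charge each prompt its own active energy plus an equal share of the
    energy the serving hardware drew while idle during the window."""
    if not active_wh:
        raise ValueError("need at least one prompt")
    idle_share = idle_wh / len(active_wh)
    return [energy + idle_share for energy in active_wh]


# Made-up active-energy measurements (Wh) for five prompts in one window.
active = [0.10, 0.15, 0.20, 0.40, 2.00]
totals = per_prompt_energy_wh(active, idle_wh=0.25)
median_wh = median(totals)
print(f"median prompt: {median_wh:.2f} Wh")
```

Using the median rather than the mean keeps a handful of unusually expensive prompts, like the 2.00 Wh entry here, from dominating the "typical" figure.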

Going down

Using those estimates, they find that the impact of an individual text request is pretty small. "We estimate the median Gemini Apps text prompt uses 0.24 watt-hours of energy, emits 0.03 grams of carbon dioxide equivalent (gCO2e), and consumes 0.26 milliliters (or about five drops) of water," they conclude. To put that in context, they estimate that the energy use is similar to about nine seconds of TV viewing.
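
The TV comparison can be sanity-checked with a few lines of arithmetic. This sketch uses only the two figures quoted above; the implied television power is derived from them, not reported by Google.

```python
PROMPT_ENERGY_WH = 0.24  # median Gemini Apps text prompt, as quoted
TV_SECONDS = 9           # "about nine seconds of TV viewing"

# Energy (Wh) = power (W) x time (h), so power = energy / time.
implied_tv_watts = PROMPT_ENERGY_WH / (TV_SECONDS / 3600)
print(f"implied TV power draw: {implied_tv_watts:.0f} W")
```

That works out to roughly 96 W, so the comparison assumes a television drawing on the order of 100 watts.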

The bad news is that the volume of requests is undoubtedly very high. The company has chosen to execute an AI operation with every single search request, a compute demand that simply didn't exist a couple of years ago. So, while the individual impact is small, the cumulative cost is likely to be considerable.
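
To see how a small per-prompt figure scales, the sketch below multiplies the quoted 0.24 Wh by a range of daily volumes. The volumes are hypothetical placeholders, since the article gives no request counts, and the per-prompt figure describes the median Gemini Apps text prompt, which may not match the AI operation attached to a search.

```python
PROMPT_ENERGY_WH = 0.24  # median Gemini Apps text prompt, as quoted


def daily_energy_mwh(prompts_per_day: float,
                     wh_per_prompt: float = PROMPT_ENERGY_WH) -> float:
    """Total daily energy in megawatt-hours for a given prompt volume."""
    return prompts_per_day * wh_per_prompt / 1_000_000  # Wh -> MWh


# Hypothetical volumes, for scale only.
for volume in (1e6, 1e8, 1e9):
    print(f"{volume:>15,.0f} prompts/day -> {daily_energy_mwh(volume):8.2f} MWh/day")
```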

The good news? Just a year ago, it would have been far, far worse.

Some of this is just down to circumstances. With the boom in solar power in the US and elsewhere, it has gotten easier for Google to arrange for renewable power. As a result, the carbon emissions per unit of energy consumed saw a 1.4x reduction over the past year. But the biggest wins have been on the software side, where different approaches have led to a 33x reduction in energy consumed per prompt.
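
The two reductions compound. As a minimal sketch, assume that the emissions from electricity for one prompt equal the energy used times the carbon intensity of that energy. Under that assumption, the two factors multiply. This covers only electricity-related emissions; emissions from manufacturing hardware do not follow the same product, so an overall per-prompt figure would differ.

```python
ENERGY_REDUCTION = 33.0    # energy per prompt, as quoted
INTENSITY_REDUCTION = 1.4  # emissions per unit of energy, as quoted

# emissions = energy x carbon intensity, so the reduction factors multiply.
combined_reduction = ENERGY_REDUCTION * INTENSITY_REDUCTION
remaining_fraction = 1 / combined_reduction
print(f"combined: {combined_reduction:.1f}x lower, "
      f"{remaining_fraction:.1%} of last year's electricity-related emissions")
```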

Most of the energy use in serving AI requests comes from time spent in the custom accelerator chips.

Credit:

Elsworth, et al.

The Google team describes a number of optimizations the company has made that contribute to this. One is an approach termed Mixture-of-Experts, which activates only the portion of an AI model needed to handle a specific request; this can drop computational needs by a factor of 10 to 100. The company has also developed a number of compact versions of its main model, which reduce the computational load as well. Data center management also plays a role, as the company can make sure that any active hardware is fully utilized, while allowing the rest to stay in a low-power state.
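
To make the Mixture-of-Experts idea concrete, here is a toy sketch of top-k routing, a common way to implement it. It is not Google's implementation: the experts are trivial scalar functions, the router scores are random stand-ins for what would normally be a learned function of the input, and all names are hypothetical.

```python
import math
import random


def softmax(scores):
    """Convert raw router scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts,
    with weights renormalized to sum to 1."""
    probs = softmax(gate_scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    mass = sum(probs[i] for i in chosen)
    return [(i, probs[i] / mass) for i in chosen]


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and blend their outputs."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_scores, k))


# Eight toy "experts"; each just scales its input.
experts = [lambda x, m=m: m * x for m in range(1, 9)]
random.seed(0)
gate_scores = [random.gauss(0, 1) for _ in experts]  # stand-in for a learned router

active = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, experts, gate_scores, k=2)
print(f"ran {len(active)} of {len(experts)} experts")
```

Only the selected experts are evaluated, so compute scales with k rather than with the total number of experts. In this toy, that is a 4x saving; the 10x to 100x range quoted above would correspond to activating a much smaller fraction of a much larger model.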

The other thing is that Google designs its own custom AI accelerators, and it architects the software that runs on them, allowing it to optimize both sides of the hardware/software divide to operate well with each other. That's especially critical given that activity on the AI accelerators accounts for over half of the total energy use of a query. Google also has lots of experience running efficient data centers that carries over to the experience with AI.

The result of all this is that Google estimates the energy consumption of a typical text query has gone down by 33x in the last year alone. That has knock-on effects, since things like the carbon emissions associated with, say, building the hardware get diluted by the fact that the hardware can handle far more queries over the course of its useful lifetime.

Given these efficiency gains, it would have been easy for Google to simply use the results as a PR exercise; instead, the company has detailed its methodology and considerations in something that reads very much like an academic publication. It's doing so because the people behind this work would like to see others in the field adopt the same approach. "We advocate for the widespread adoption of this or similarly comprehensive measurement frameworks to ensure that as the capabilities of AI advance, their environmental efficiency does as well," they conclude.

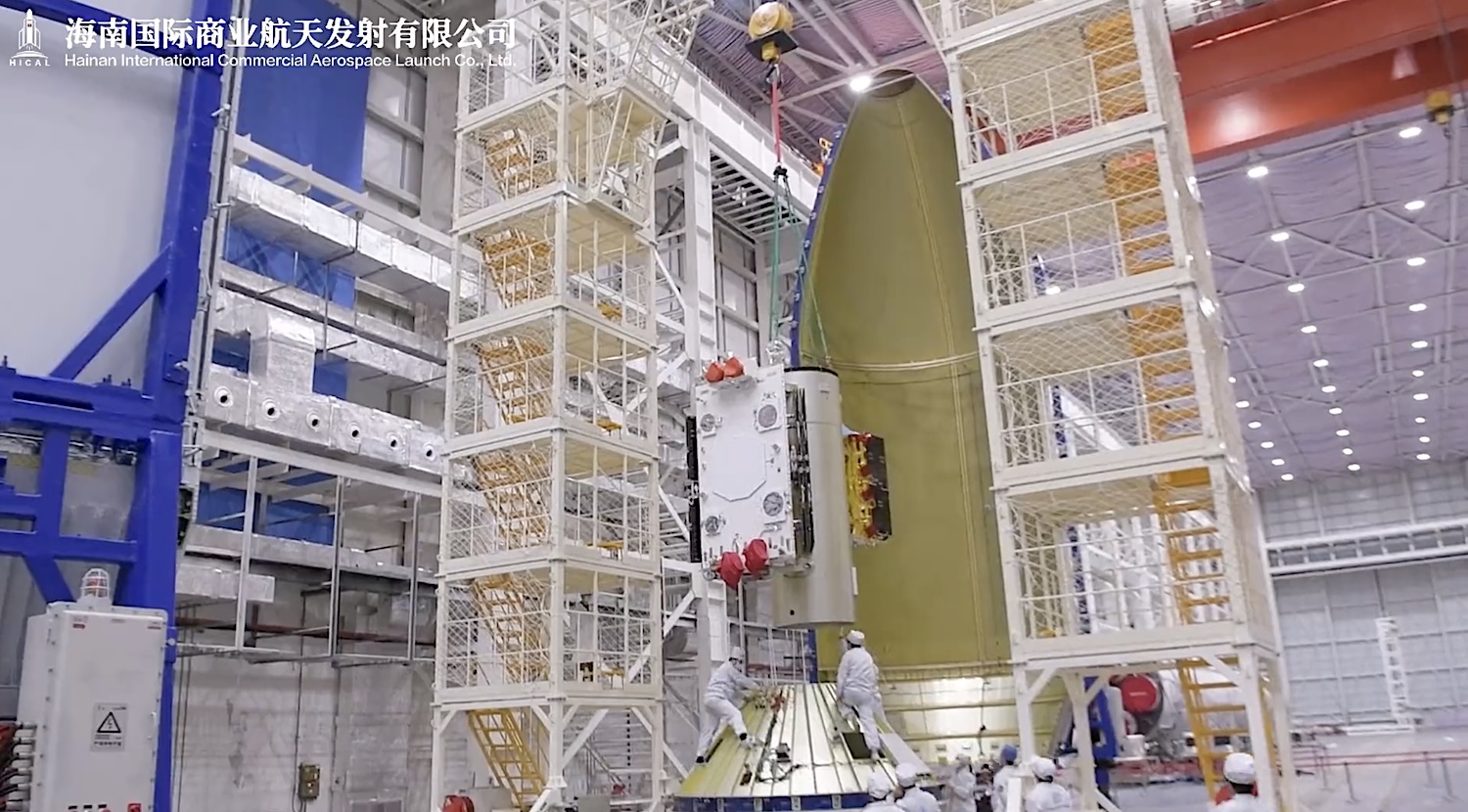

A prototype Guowang satellite is seen prepared for encapsulation inside the nose cone of a Long March 12 rocket last year. This is one of the only views of a Guowang spacecraft China has publicly released.

Credit:

Hainan International Commercial Aerospace Launch Company Ltd.

A Long March 5B rocket lifts off from the Wenchang Space Launch Site in China's Hainan Province on August 13, 2025, with a group of Guowang satellites.

Credit:

Luo Yunfei/China News Service/VCG via Getty Images

A Long March 6A carries a group of Guowang satellites into orbit on July 27, 2025, from the Taiyuan Satellite Launch Center in north China's Shanxi Province. China has used four different rocket configurations to place five groups of Guowang satellites into orbit in the last month.

Credit:

Wang Yapeng/Xinhua via Getty Images

An aerial overview of the Starship production site in South Texas earlier this year. The sprawling Starfactory is in the center.

Credit:

SpaceX

Here's a view of SpaceX's Starship production facilities, from the east side, in late February 2020.

Credit:

Eric Berger

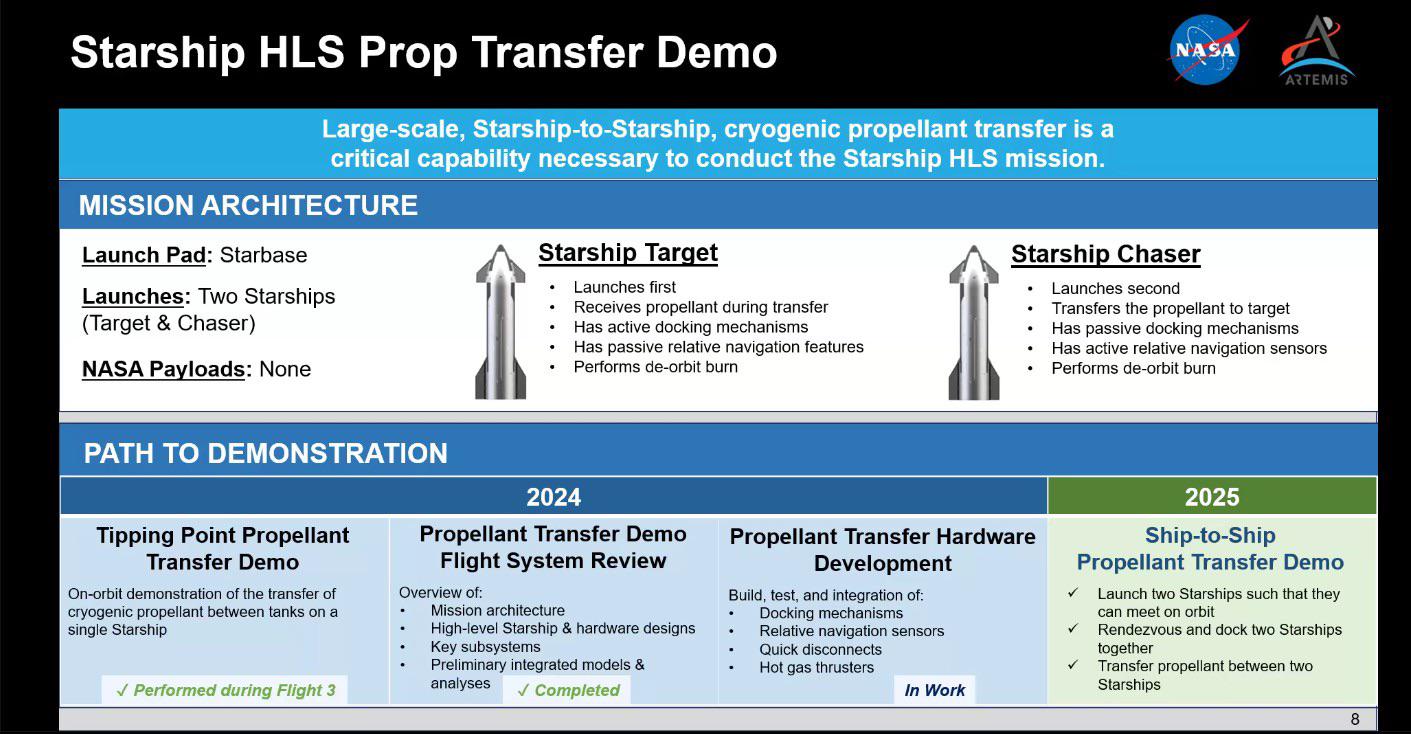

Some details about the Starship propellant transfer test, a key milestone that NASA and SpaceX had hoped to complete this year but now may tackle in 2026.

Credit:

NASA

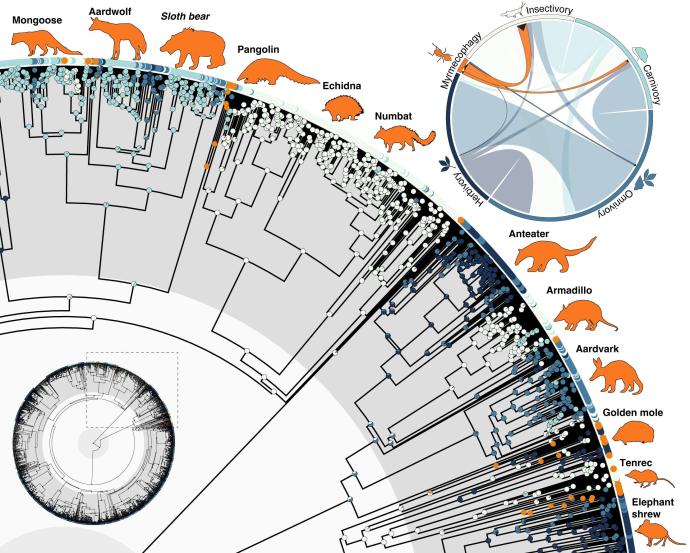

The large diagram to the left shows that ant and termite eaters are widely distributed among mammalian species. The small diagram on the right shows that, although most evolved from insect eaters, each of the major diet groups produced some ant-eating specialists.
